Google has been slowly changing how it talks about ranking algorithms.

Although Google hasn’t said anything genuinely new to sharp-eyed industry watchers, it’s worth shining a light on what it has subtly said, formalizing it and applying it to digital marketing.

Information satisfaction and helpful people-first content

The core concept we should be focusing on is information satisfaction (IS). It is not new and has been hiding in plain sight for a long time.

What is information satisfaction?

Information satisfaction is an important evaluation metric for assessing how well a system meets the needs of its users.

When it comes to SEO, the system is an information retrieval system (i.e., a search engine). But IS is also used to evaluate many other systems and scenarios, such as content management systems and the experiences of cancer patients.

A search engine’s results pages would have good IS scores if the search features and the web pages shown as the results, individually and collectively, meet the searcher’s needs.

To understand the role of IS in organic search, we have two questions to tackle:

- Why and how does Google care about IS?

- What can businesses do about it?

What Google has been saying about IS

Here, I’ll focus only on the more recent communications from Google, although the word “satisfaction” has come up in the industry occasionally for well over a decade.

Since the helpful content update launched in August 2022, Google has been talking about ranking algorithms designed to rank “more original, helpful content written by people, for people.”

Satisfaction is the key concept Google uses to describe the helpful content ranking system, stating the goal is to “reward content where visitors feel they’ve had a satisfying experience.”

Later in that document, Google says the helpful content update “classifier process is entirely automated, using a machine-learning model.”

To rephrase this more simply (and speculate a touch), the machine learning model is likely:

- Constantly running in the background.

- Regularly updated and retuned to account for changes to existing pages and newly published pages.

This goes a long way toward explaining why recovering from a helpful content demotion can take several months.

In October 2023, the concept of information satisfaction was central to Pandu Nayak’s testimony in Google’s antitrust case in the U.S., where it was described in several ways as the key top-level metric for the whole SERP that Google optimizes for.

The testimony in its entirety is really worth reading in detail, and I’d like to highlight just three very clear excerpts to illustrate my point:

- Page 6428, in the middle of a discussion about ranking experiments:

Q: So IS is Google’s primary top level measure of quality, right?

A: Yes.

- Page 6432, when talking about RankBrain:

Q: And then it’s [RankBrain] fine-tuned on IS data?

A: That is correct.

- Page 6448, when talking about RankEmbed BERT:

Q: And then it’s [RankEmbed BERT] fine-tuned on human IS rater data?

A: Yes, it is.

This means that the IS score is the ultimate arbiter of Google search quality, both overall and for many individual algorithms, regardless of which signals those algorithms look at.

For example, it doesn’t matter how RankBrain actually works: it’s being judged and fine-tuned by IS scores. That’s really the fundamental SEO takeaway from that testimony. (Also, DeepRank has already taken on more of RankBrain’s capability.)

Another place where satisfaction comes up as a metric is the Search Quality Rater Guidelines. Going back to the 2017 version, the guidelines describe high-quality pages as having:

- “A Satisfying Amount of High Quality Main Content” (section 4.2).

- “Clear and Satisfying Website Information” (section 4.3).

Each version of the rater guidelines since then has expanded on this and explained it in more detail.

Indeed, in section 0.0 of the current version, Google states, “diversity in search results is essential to satisfy the diversity of people who use search.” Google adds nuance to this immediately afterward when it talks about “authoritative and trustworthy information.”

The idea of information satisfaction is also core to rating page quality (section 3.1 of the latest version), with Google stating that when thinking about the quality of the MC (main content), the rater should “Consider the extent to which the MC is satisfying and helps the page achieve its purpose.” The concept comes up many more times later in the document.

Even more recently, in Google’s 2023 Q4 results call at the end of January 2024, Google’s CEO Sundar Pichai said, “We are improving satisfaction,” when talking about Search Generative Experience’s ability to answer a wider range of queries. I could go on.

In short, information satisfaction is the key search quality metric for Google. Information retrieval geeks may not be surprised to see IS as a key metric. Businesses and content creators in general, though, may need a bit more help.

Applying IS to SEO and digital marketing

For the longest time, I’ve been saying that SEO is actually product management.

This framing gets people thinking about SEO in terms of the user journey, where every interaction a user has with the business is part of that journey and of the overall user experience.

Websites tend to be the central hub of a brand’s online presence, so how the website satisfies the user is critical for happy customers and good Google rankings.

When taking on a new client, one of the first questions I ask is about their customers’ user personas and customer journeys, as applying IS thinking in practice really depends on how sophisticated the client’s user engagement operation is.

When an organization’s user engagement processes are rudimentary, embedding IS thinking into the content creation, website operation and marketing activities requires a sustained effort to create from scratch or fundamentally change existing internal processes.

At the other extreme, organizations that have good systems for engaging customers, document those engagements in a format other teams can use and have specialist teams that collaborate with each other are likely already satisfying users anyway.

Regardless of where your organization sits on this spectrum, embedding IS thinking requires two things:

- A good understanding of the customer journeys, as they are the essential framework you need for content creation and maintenance.

- A way to assess information satisfaction at each step of each journey.

Customer journeys are the framework

Firstly, you need to have a very good understanding of the different users (approximated with user personas) and each step of the customer journey for each user persona.

Then, you can use that to derive how to best satisfy the user at each step of the journey, at that specific point in time and place.

Let’s parse this out a bit. Each individual customer journey needs to be:

- Detailed, describing at each step the user, their context, their mental state, their influences and influencers and a whole slew of other aspects.

- Constantly updated to reflect changes in the market or user behaviors and to reflect the organization’s latest understanding of its customers. It’s best to think of the customer journey as the current best approximation of what the organization knows about its customers.

In my experience applying IS thinking in client engagements, problems with the customer journeys are the most common issues.

Firstly, no organization has just one type of customer, and there may be multiple personas for a given type of customer. This means that, in reality, we’re talking about multiple customer journeys.

The other problem I see often is that customer journeys are treated as linear: a clean series of steps or stages that users follow in the documented order. In reality, human behavior and psychology are much messier.

Furthermore, a harmful side effect of linear thinking is that you may not treat the customer’s post-conversion actions as steps in the journey in their own right. These include user retention, customer support and customers becoming promoters of your brand, services or products.

In reality, these post-conversion actions mean that the user journey actually has a loop with multiple branches. One of the best illustrations of this comes from McKinsey, which calls it the loyalty loop.
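To make the loop concrete, here is a minimal Python sketch that models one persona’s journey as a directed graph instead of a list. The step names and transitions are hypothetical and only loosely inspired by the loyalty loop idea (a retained customer jumping straight back to evaluation for the next purchase). A depth-first search then finds the loop that post-conversion steps create, which a linear list of stages cannot represent.

```python
from typing import Dict, List, Set

# Hypothetical journey for one persona: each step maps to the steps a user
# may move to next. Step names and transitions are illustrative assumptions.
Journey = Dict[str, List[str]]

journey: Journey = {
    "awareness": ["consideration"],
    "consideration": ["evaluation"],
    "evaluation": ["purchase", "consideration"],  # users can step back
    "purchase": ["onboarding"],
    "onboarding": ["support", "retention"],
    "support": ["retention"],
    "retention": ["advocacy", "evaluation"],  # repeat purchase re-enters evaluation
    "advocacy": [],
}


def find_loop(journey: Journey, start: str) -> List[str]:
    """Return one cycle reachable from `start` (first step repeated at the end), or [] if none."""
    path: List[str] = []      # steps on the current depth-first path
    on_path: Set[str] = set()  # fast membership test for the current path
    done: Set[str] = set()     # steps fully explored without finding a cycle

    def dfs(step: str) -> List[str]:
        path.append(step)
        on_path.add(step)
        for nxt in journey.get(step, []):
            if nxt in on_path:
                # Back edge: the cycle runs from nxt's position to the current step.
                return path[path.index(nxt):] + [nxt]
            if nxt not in done:
                cycle = dfs(nxt)
                if cycle:
                    return cycle
        path.pop()
        on_path.remove(step)
        done.add(step)
        return []

    return dfs(start)


print(" -> ".join(find_loop(journey, "awareness")))
```

Here the search reports the loop from evaluation through purchase, onboarding, support and retention back to evaluation. Each step in that loop is a point where users search and need to be satisfied, not just the steps before conversion.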

Measuring information satisfaction

With the customer journeys in hand, the job of the content and SEO teams is to satisfy the searches the user does at each step of the journey.

Google is always discovering and inventing new signals to measure satisfaction, and we outsiders need not worry (nor care) about how Google goes about this task. We can do an even better job because we know our customers better! And if we’re stuck, we know who to ask.

The first step is to (re)frame the thinking behind keyword research: When IS is the goal, a query typed in Google is the public manifestation of a problem users have at that point in the customer journey.

Satisfying the user now means satisfying their information need or helping them complete the task they set out to do.

SEOs have long talked about query intent. That’s a good step in the right direction to think about IS, but you need to get a lot deeper.

When you consider a query at a specific step in the customer journey, you may interpret it differently than you would at another step in the same user’s journey.

Yes, it’s very common to find the same query being used at different points in the customer journey and the query would likely need to be satisfied differently at each step.
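One practical way to surface these queries is to tag each query with the persona and journey step where it shows up, then look for queries that appear at more than one step. The Python sketch below assumes you already have such tagged data (for example, from customer interviews or support logs); the queries, personas and step names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, NamedTuple, Set


class QueryObservation(NamedTuple):
    """One hypothetical observation of a query at a given journey step."""
    query: str
    persona: str
    step: str


def queries_spanning_steps(observations: List[QueryObservation]) -> Dict[str, Set[str]]:
    """Map each query seen at two or more journey steps to the set of those steps."""
    steps_by_query: Dict[str, Set[str]] = defaultdict(set)
    for obs in observations:
        # Light normalization so casing/whitespace differences don't split a query.
        steps_by_query[obs.query.strip().lower()].add(obs.step)
    return {q: steps for q, steps in steps_by_query.items() if len(steps) > 1}


observations = [
    QueryObservation("standing desk height", "remote worker", "consideration"),
    QueryObservation("Standing desk height ", "remote worker", "onboarding"),
    QueryObservation("best standing desk", "remote worker", "evaluation"),
    QueryObservation("standing desk wobble", "office manager", "support"),
]

for query, steps in sorted(queries_spanning_steps(observations).items()):
    # Each step listed here likely needs its own way of satisfying the query.
    print(query, sorted(steps))
```

In this made-up data, “standing desk height” shows up both while a user is still considering a purchase and after delivery, during setup. Those are two different needs behind the same query.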

With this mental model, we get to how to actually measure information satisfaction.

The idea of IS is decades old, and there is a lot of research on how best to measure it in different settings.

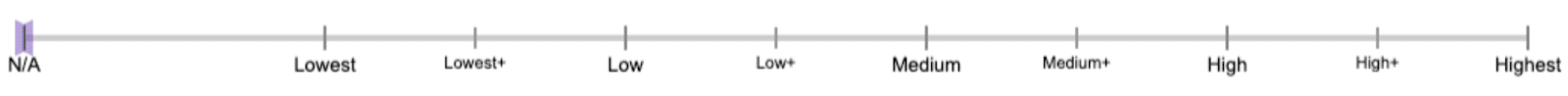

At the risk of oversimplifying a deep body of research, an important tool for measuring IS is the survey. Most IS survey questions use a Likert scale: an ordered rating scale that runs from a negative rating to a positive one.

Anyone familiar with the General Guidelines of the Search Quality Rating Program will immediately recognize this format. The page quality (PQ) rating task in the current guidelines asks raters to place each page on a slider running from Lowest to Highest quality.

That’s a typical Likert-scale question in an information satisfaction questionnaire.
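As a rough illustration of how Likert answers become a number, the following Python sketch maps each answer on a hypothetical five-point scale to 0 through 4, averages one respondent’s answers and rescales the result to the range 0 to 1. The labels, the number of points and the plain average are assumptions for illustration, not the scale or scoring used in Google’s rater program.

```python
from statistics import mean
from typing import Dict, List

# Hypothetical five-point Likert scale, ordered from most negative to most positive.
SCALE: List[str] = [
    "very dissatisfied",
    "dissatisfied",
    "neutral",
    "satisfied",
    "very satisfied",
]
POINTS: Dict[str, int] = {label: i for i, label in enumerate(SCALE)}  # 0..4


def satisfaction_score(answers: List[str]) -> float:
    """Average one respondent's Likert answers, rescaled to 0.0 (worst) .. 1.0 (best)."""
    if not answers:
        raise ValueError("need at least one answer")
    raw = mean(POINTS[a] for a in answers)  # KeyError on an unknown label
    return raw / (len(SCALE) - 1)


# One respondent answering three questions about a page.
answers = ["satisfied", "very satisfied", "neutral"]
print(satisfaction_score(answers))  # prints 0.75
```

Rescaling to 0 to 1 is a convenience so that scores from questionnaires with different numbers of scale points can be compared side by side.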

Nayak’s testimony confirms that human ratings feed into the IS score. For example, on page 6425 (lines labeled A are Nayak’s answers), in the middle of a discussion about experiments on algorithm changes:

- A. We look at all the results for these 15,000 queries.

- Q. OK.

- A. And we get them rated by our raters.

- Q. So human raters are looking at these?

- A. At all of them and they provide ratings. So, you get an IS score for the query set as a whole.

- Q. For the set?

- A. Yeah, you get an IS score for that (continues…)

This means that to approximate how Google assesses the IS score of a page, you can create a rating process that follows the rater guidelines.
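Here is a minimal Python sketch of such a process, loosely modeled on the testimony above. Raters score the results for each query in a fixed query set, scores are averaged per query and then across the set, and the same is done for a control and an experimental version. The 0-to-1 scores, the plain averaging, the function names and the data are all assumptions; the testimony does not say how Google aggregates ratings into an IS score.

```python
from statistics import mean
from typing import Dict, List

# Hypothetical ratings: query -> rater scores already normalized to 0..1.
Ratings = Dict[str, List[float]]


def query_set_is_score(ratings: Ratings) -> float:
    """Average raters per query, then average queries, so each query counts equally."""
    per_query = [mean(scores) for scores in ratings.values() if scores]
    if not per_query:
        raise ValueError("no rated queries")
    return mean(per_query)


def is_delta(control: Ratings, experiment: Ratings) -> float:
    """Experiment minus control; both must be rated on the same query set."""
    if control.keys() != experiment.keys():
        raise ValueError("control and experiment must use the same query set")
    return query_set_is_score(experiment) - query_set_is_score(control)


control: Ratings = {
    "standing desk height": [0.50, 0.75],
    "best standing desk": [0.75, 0.75, 1.00],
}
experiment: Ratings = {
    "standing desk height": [0.75, 1.00],
    "best standing desk": [0.75, 0.75, 1.00],
}

delta = is_delta(control, experiment)
print(f"IS change: {delta:+.3f}")
```

Averaging per query first keeps queries with many ratings from dominating the set-level score. Whether that matches Google’s weighting is unknown.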

Google is literally telling us that Google is a satisfaction engine and, therefore, SEO is actually satisfaction engine optimization.

from Search Engine Land https://ift.tt/CWUymwb
